ACCESSIBILITY & INDEXATION

Accessibility and indexation are extremely important, especially for life science companies with large product catalogues. Because there are so many unique genes and products that reference product families, web developers often place the entire product catalogue in a database. When customers search the website, they can then find every database product in one place. Executives are happy, and web developers can charge less for this type of catalogue. What is often overlooked is how search engines see it: without specific parent category landing pages, search engines may not be able to index the products that only appear in your database search results.

In the past few years, Google has taken a harder stance against indexing site search results, because allowing them to be indexed can create a large amount of duplicate content. Many companies intentionally noindex their search results and create parent category pages for better indexing. Additionally, if you plan to run paid traffic to any of these products, you won’t be able to send it to a dynamically generated search listing page. Instead, you will need a parent category landing page that lists the unique SKUs of your product. For example, the parent category page would be a gene family, and its child products could reference different SKUs, sizes, fresh/frozen and other formats of your product.

38. Check the robots.txt.

Has the entire site, or any important content, been blocked? Is link equity being orphaned because pages are blocked in the robots.txt?
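To spot-check this across many URLs, Python's standard-library `urllib.robotparser` can report which URLs a robots.txt blocks for a given crawler. This is a minimal sketch: the robots.txt content and the example.com URLs are hypothetical, with the `/products/` rule simulating important content blocked by mistake. `robotparser` applies simple first-match prefix rules, so it does not reproduce Google's `*` and `$` wildcards or longest-match precedence; treat a clean result as a first pass, not a guarantee.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: /cart/ is an ordinary exclusion,
# while /products/ simulates important content blocked by mistake.
# For a live site you could call parser.set_url("https://<your-site>/robots.txt") and parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /products/
"""

def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

if __name__ == "__main__":
    pages = [
        "https://www.example.com/",
        "https://www.example.com/products/gene-family-a",  # hypothetical category page
        "https://www.example.com/cart/checkout",
    ]
    for url in blocked_urls(ROBOTS_TXT, pages):
        print("Blocked:", url)
```

Any important page that shows up in the "Blocked" list is a candidate for a robots.txt fix.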

39. Turn off JavaScript, cookies, and CSS.

- Install the Web Developer Toolbar for your browser.

- After turning off JS, cookies and CSS, is the content still there?

- Do the navigation links work?

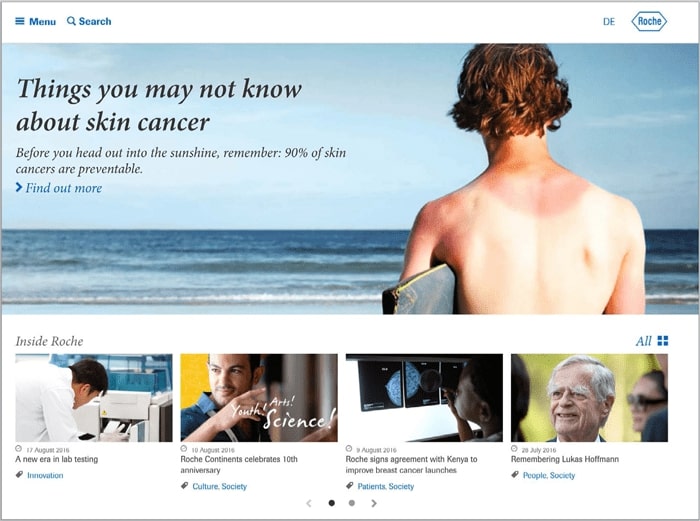

Fig 18. Roche’s full website with JS and CSS enabled.

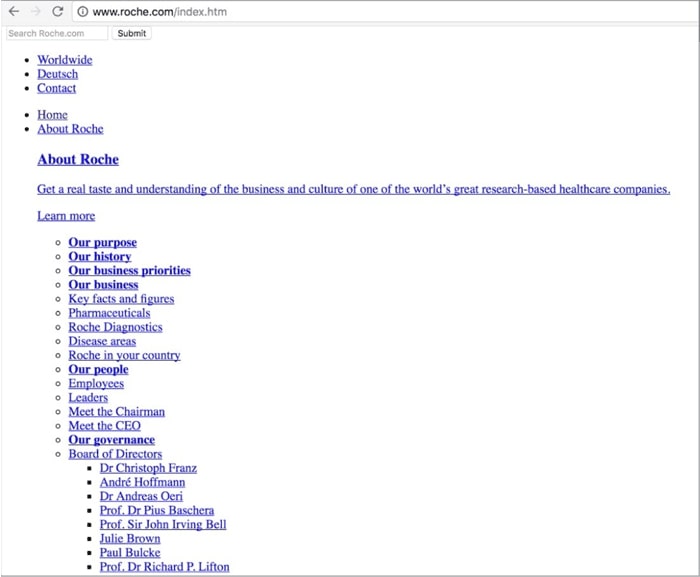

Fig 19. Roche’s website with JS and CSS disabled. The links and content are still present and crawlable by search engines.
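The toolbar check can also be approximated in code: parse the HTML exactly as the server returns it, before any JavaScript runs, and list the links a crawler could follow without executing scripts. This is a minimal sketch using Python's standard-library `html.parser`; the sample markup is hypothetical and stands in for HTML fetched from your own site. Googlebot does render many JavaScript pages, so read this as a conservative check rather than a model of Googlebot.

```python
from html.parser import HTMLParser

class LinkCollector(HTMLParser):
    """Collects crawlable href values from <a> tags in raw HTML (no JavaScript is executed)."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            # Skip script pseudo-links and same-page fragments; a crawler gains nothing from them.
            if name == "href" and value and not value.startswith(("javascript:", "#")):
                self.links.append(value)

def static_links(html: str) -> list[str]:
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links

# Hypothetical navigation: only the first item is a plain HTML link.
SAMPLE = """
<nav>
  <a href="/products/">Products</a>
  <a href="javascript:void(0)" onclick="openMenu()">Antibodies</a>
  <span onclick="location.href='/services/'">Services</span>
</nav>
"""

if __name__ == "__main__":
    print(static_links(SAMPLE))  # ['/products/']
```

Navigation items that appear on screen but are missing from this list are the ones that depend on JavaScript.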

40. Now change your user agent to Googlebot.

- Use a user agent switcher add-on for your browser.

- Is the site cloaking? Cloaking is a search engine optimization (SEO) technique in which the content presented to the search engine spider differs from the content presented to the user’s browser. It is done by serving content based on the IP address or the User-Agent HTTP header of the visitor requesting the page.

- Does it look the same as before?
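A scripted version of this comparison fetches the same URL once with a browser user agent and once with Googlebot's, then measures how similar the two responses are. This is a sketch using only Python's standard library. The browser user-agent string is an arbitrary example, and the Googlebot string is the desktop one Google has published (confirm the current value in Google's crawler documentation). Because both requests come from your own IP address, this only surfaces User-Agent-based cloaking, not IP-based cloaking, and dynamic elements such as timestamps or session tokens will keep the ratio below 1.0 even on honest pages.

```python
import difflib
import urllib.request

# Googlebot desktop user-agent string as published by Google; verify it is still current.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Arbitrary example of a regular browser user agent.
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

def fetch(url: str, user_agent: str) -> str:
    """Download url while sending the given User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")

def similarity(a: str, b: str) -> float:
    """Similarity ratio in [0, 1]; values well below 1.0 mean the responses differ noticeably."""
    return difflib.SequenceMatcher(None, a, b).ratio()
```

Usage against your own page: `similarity(fetch(url, BROWSER_UA), fetch(url, GOOGLEBOT_UA))`. A low ratio is a prompt to compare the two responses side by side, not proof of cloaking.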

41. Check your Moz crawl report for 4xx errors or 5xx errors and resolve them.
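To re-check URLs after fixing them, or outside Moz, a short script can request each one and sort the responses into 4xx and 5xx buckets. This is a minimal sketch using only Python's standard library. It sends HEAD requests, which some servers handle differently from GET, and `urlopen` follows redirects, so the status reported is that of the final URL. The list of URLs to check is whatever you export from your crawl tool.

```python
import urllib.error
import urllib.request
from typing import Optional

def status_of(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url, including 4xx/5xx responses."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urlopen raises on 4xx/5xx; the exception still carries the status code.
        return err.code

def error_class(status: int) -> Optional[str]:
    if 400 <= status < 500:
        return "4xx"
    if 500 <= status < 600:
        return "5xx"
    return None

def triage(statuses: dict[str, int]) -> dict[str, list[str]]:
    """Group URLs by error class, ignoring successful and redirect statuses."""
    report: dict[str, list[str]] = {"4xx": [], "5xx": []}
    for url, status in statuses.items():
        bucket = error_class(status)
        if bucket:
            report[bucket].append(url)
    return report
```

Usage: `triage({url: status_of(url) for url in urls})`, where `urls` is your exported list.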

42. Are XML sitemaps listed in the robots.txt file?
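On Python 3.8 and later, the standard-library `urllib.robotparser` module exposes the `Sitemap:` lines it finds through `site_maps()`, which returns `None` when there are none. A minimal sketch; the robots.txt content in the usage is hypothetical.

```python
from urllib.robotparser import RobotFileParser

def declared_sitemaps(robots_txt: str) -> list[str]:
    """Return the sitemap URLs declared in a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None rather than an empty list when no Sitemap lines exist.
    return parser.site_maps() or []
```

An empty result means no sitemap is declared, so crawlers must discover it some other way, such as a Search Console submission.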

43. Are XML sitemaps submitted to Google Search Console (formerly Google Webmaster Tools) and Bing Webmaster Tools?

44. Are pages accidentally being tagged with the meta robots noindex command?

A noindex tag keeps search engines from indexing those pages, so they will not appear in search results.
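To find accidental noindex tags, check both the robots meta tag in the HTML and the `X-Robots-Tag` HTTP response header, since either can carry the directive. This is a minimal sketch using Python's standard-library `html.parser`. It treats `none` as equivalent to `noindex` (as Google documents), looks only at the `robots` and `googlebot` meta names, and does not parse user-agent-prefixed header values such as `googlebot: noindex`.

```python
from html.parser import HTMLParser

# "none" is shorthand for "noindex, nofollow", so it also blocks indexing.
BLOCKING = {"noindex", "none"}

def _split(value: str) -> set[str]:
    """Turn a comma-separated directive list into a lowercase set."""
    return {part.strip().lower() for part in value.split(",") if part.strip()}

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() in {"robots", "googlebot"}:
            self.directives |= _split(attributes.get("content", ""))

def has_noindex(html: str, x_robots_tag: str = "") -> bool:
    """True if the page's meta tags or X-Robots-Tag header value block indexing."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return bool((parser.directives | _split(x_robots_tag)) & BLOCKING)
```

Running this over pages you expect to rank highlights any that were noindexed by accident.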

45. Are there pages that should have the noindex command applied?

Landing pages that you’ve created specifically for certain campaigns, as well as duplicate pages, should be noindexed so that search engines leave them out of their results.

46. Do goal pages have the noindex command applied?

This prevents visitors who land on a goal page (such as a thank-you page) directly from organic search from being recorded as goal completions in analytics. If your goal pages appear in Google’s organic results, they will skew your conversion metrics.
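Items 44 to 46 can be combined into one audit: given each page's current noindex status (for example, from your crawl tool's export), compare it against a policy of which pages should be noindexed. The URL patterns below (`/lp/` for campaign landing pages, `/thank-you` for goal pages) are hypothetical placeholders for your own site's paths, and this sketch does not attempt to detect duplicate pages.

```python
import re

# Hypothetical policy: paths matching these patterns should carry noindex.
SHOULD_NOINDEX = [
    re.compile(r"^/lp/"),          # campaign-specific landing pages (item 45)
    re.compile(r"/thank-you/?$"),  # goal / conversion pages (item 46)
]

def audit(noindex_status: dict[str, bool]) -> dict[str, list[str]]:
    """Compare each path's actual noindex status with the hypothetical policy above."""
    report: dict[str, list[str]] = {"missing_noindex": [], "unexpected_noindex": []}
    for path, is_noindexed in noindex_status.items():
        should = any(pattern.search(path) for pattern in SHOULD_NOINDEX)
        if should and not is_noindexed:
            report["missing_noindex"].append(path)     # items 45 and 46
        elif is_noindexed and not should:
            report["unexpected_noindex"].append(path)  # item 44: possibly accidental
    return report
```

Paths under `unexpected_noindex` are not necessarily wrong; they are the pages to review by hand.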